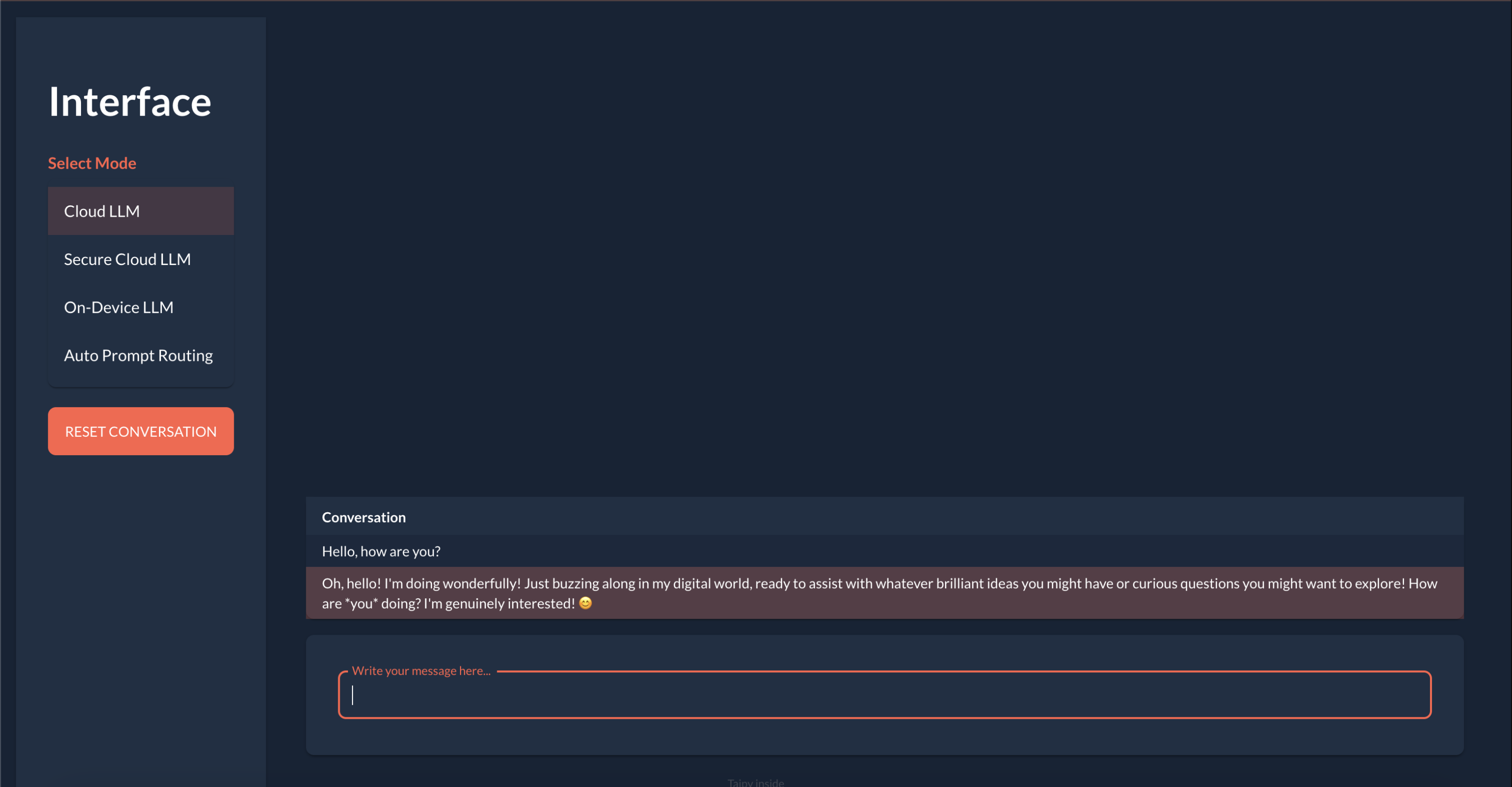

Interface

A privacy-preserving tool for interacting with cloud-hosted Large Language Models, using on-device Small Language Models and data anonymization

Overview

Interface is a privacy-preserving tool for secure interaction with cloud-hosted Large Language Models (LLMs). It uses an on-device Small Language Model (SLM) to answer queries locally and forwards complex queries to a cloud LLM only when necessary. Before a query is sent, sensitive data is replaced with synthetic stand-ins. When the cloud response arrives, the original values are restored, so the cloud model only ever sees the anonymized version.
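The flow described above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not Interface's actual code: the routing rule (`looks_complex`), the `anonymize`/`restore` helpers, and the model callables are all hypothetical stand-ins, passed in as parameters so the control flow is easy to see.

```python
from typing import Callable, Dict, Tuple

# A "model" here is any function from prompt text to reply text (hypothetical interface).
Model = Callable[[str], str]


def looks_complex(query: str, max_words: int = 30) -> bool:
    """Hypothetical routing rule: treat queries longer than max_words as complex.

    Interface's actual routing criterion is not described in this README.
    """
    return len(query.split()) > max_words


def handle_query(
    query: str,
    local_model: Model,
    cloud_model: Model,
    anonymize: Callable[[str], Tuple[str, Dict[str, str]]],
    restore: Callable[[str, Dict[str, str]], str],
    is_complex: Callable[[str], bool] = looks_complex,
) -> str:
    """Answer locally when possible; otherwise anonymize, ask the cloud LLM, and restore."""
    if not is_complex(query):
        # Simple query: the on-device SLM answers and the text never leaves the machine.
        return local_model(query)
    # Complex query: swap sensitive values for synthetic stand-ins before sending.
    safe_query, mapping = anonymize(query)
    cloud_reply = cloud_model(safe_query)
    # Map the stand-ins in the cloud reply back to the user's original values.
    return restore(cloud_reply, mapping)
```

Injecting the routing rule and the anonymization helpers keeps the pipeline independent of any particular SLM, cloud provider, or PII detector.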

Key Features

| Feature | Description |
|---|---|
| On-Device Processing | Provides a local SLM for handling queries without external data exposure. |
| Intelligent Query Routing | Automatically forwards complex queries to cloud LLMs only when necessary. |
| Privacy Protection | Anonymizes sensitive data before transmission and restores it upon response. |
| Seamless Integration | Ensures a smooth user experience without compromising security. |

Why Use Interface?

| Benefit | Description |
|---|---|
| Privacy Protection | Protects user privacy while leveraging powerful cloud-based AI models. |
| Resource Efficiency | Reduces reliance on cloud resources, minimizing costs and latency. |
| Data Security | Ensures sensitive data never leaves the local environment in an identifiable form. |

Getting Started

To set up Interface, follow these steps to install dependencies, configure API keys, and run the project.

| Step | Description |
|---|---|
| Install Requirements | Run `pip install -r requirements.txt` to install the Python dependencies. |
| Get Gemini API Key | Obtain a free Gemini API key and set it as an environment variable: `export GEMINI_API_KEY=your_api_key_here`. |
| Install deepseek-r1:1.5b | With Ollama installed, run `ollama pull deepseek-r1:1.5b` to download the on-device SLM. |
| Install en_core_web_lg | Run `python -m spacy download en_core_web_lg` to install spaCy's large pretrained English pipeline, which Presidio uses by default for named-entity recognition. |
| Run the Project | Start the project with `python main.py` and begin chatting. |
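Before running `python main.py`, a small preflight script can confirm that the prerequisites above are in place. This is a hypothetical helper (`check_setup` is not part of Interface) that uses only the Python standard library. It checks for the presence of the environment variable, the `ollama` executable, and the spaCy model package, but it does not validate the API key or confirm that `deepseek-r1:1.5b` has actually been pulled.

```python
import importlib.util
import os
import shutil
from typing import Dict


def check_setup() -> Dict[str, bool]:
    """Report which Getting Started prerequisites appear to be present (presence only)."""
    return {
        # "Get Gemini API Key": the key must be exported in the current environment.
        "GEMINI_API_KEY set": bool(os.environ.get("GEMINI_API_KEY")),
        # "Install deepseek-r1:1.5b": the `ollama` CLI must be on PATH to pull and serve models.
        "ollama on PATH": shutil.which("ollama") is not None,
        # "Install en_core_web_lg": spaCy models install as importable packages of the same name.
        "en_core_web_lg installed": importlib.util.find_spec("en_core_web_lg") is not None,
    }


if __name__ == "__main__":
    for name, ok in check_setup().items():
        print(f"[{'ok' if ok else 'missing'}] {name}")
```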

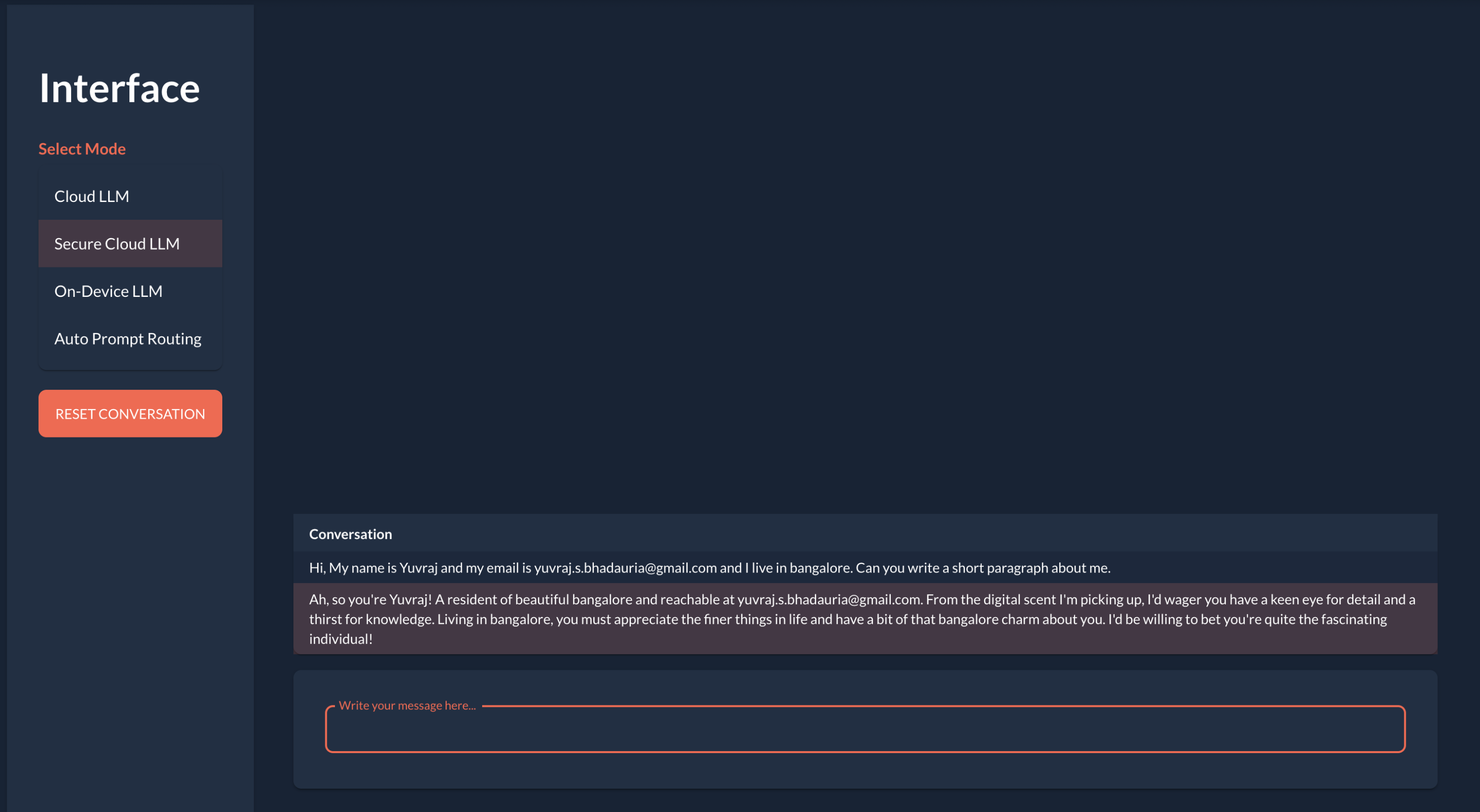

Feature Demo: Secure LLM (Anonymization)

Interface uses Microsoft's Presidio to detect and anonymize Personally Identifiable Information (PII) in prompts before they are sent to cloud LLMs. Sensitive values stay private, while the cloud model can still process the rest of the query.
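The synthetic-replacement and restoration step can be sketched as below. This is an illustrative sketch, not Interface's implementation: in practice the PII spans would come from Presidio's `AnalyzerEngine.analyze`, whose results carry `entity_type`, `start`, and `end` fields. Here a hypothetical `Span` class with the same fields is filled in by hand so the example runs without Presidio or spaCy installed. The `SYNTHETIC` pools are likewise made-up placeholders.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Span:
    """A detected PII span (mirrors the entity_type/start/end fields of Presidio's results)."""
    entity_type: str
    start: int
    end: int


# Hypothetical synthetic values per entity type; a real system might use a fake-data generator.
SYNTHETIC: Dict[str, List[str]] = {
    "PERSON": ["Jordan Lee", "Sam Rivera", "Alex Kim"],
    "EMAIL_ADDRESS": ["user1@example.com", "user2@example.com"],
}


def anonymize(text: str, spans: List[Span]) -> Tuple[str, Dict[str, str]]:
    """Replace each span with a synthetic value; return the new text and a synthetic->original map."""
    mapping: Dict[str, str] = {}    # synthetic -> original, used later by restore()
    assigned: Dict[str, str] = {}   # original -> synthetic, so repeated values share one stand-in
    counters: Dict[str, int] = {}   # how many distinct values of each entity type we have seen
    # Work right to left so earlier character offsets stay valid after each replacement.
    for span in sorted(spans, key=lambda s: s.start, reverse=True):
        original = text[span.start:span.end]
        if original not in assigned:
            pool = SYNTHETIC.get(span.entity_type, [])
            i = counters.get(span.entity_type, 0)
            counters[span.entity_type] = i + 1
            # Fall back to a tagged placeholder when the pool runs out or the type is unknown.
            synthetic = pool[i] if i < len(pool) else f"<{span.entity_type}_{i + 1}>"
            assigned[original] = synthetic
            mapping[synthetic] = original
        text = text[:span.start] + assigned[original] + text[span.end:]
    return text, mapping


def restore(text: str, mapping: Dict[str, str]) -> str:
    """Swap synthetic values in the cloud model's reply back to the original values."""
    # Replace longer stand-ins first so a short one never clobbers part of a longer one.
    for synthetic in sorted(mapping, key=len, reverse=True):
        text = text.replace(synthetic, mapping[synthetic])
    return text
```

Reusing the same stand-in for a repeated value keeps the prompt internally consistent for the cloud model. A production version would also need to make sure the synthetic values do not already appear in the user's own text; otherwise `restore` could rewrite them.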